29th August 2012

The people working on the programming of the Addicted2Random tool met in person for the first time in Barcelona. Here are the outcomes of the first day of work.

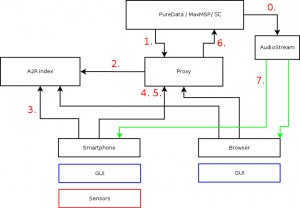

0. Precondition: the audio backend (Puredata/MaxMSP/SuperCollider) is started and transmits an audio stream.

1. The audio backend informs the proxy that it has started. It tells the proxy which kinds of gui elements it can handle, how they map to its input data, and where the audio stream can be found, and it preselects a proxy-algorithm for calculating the element input data.

2. The proxy server registers its new a2r session with the central a2r index server.

3. Someone starts the a2r client. The client asks the central a2r index server which sessions are active and can be joined.

4. The client chooses a session and asks the corresponding proxy which gui elements it has to make available and where the stream can be found.

5. The user chooses the gui element he/she wants to play with and starts interacting. The a2r client computes sensor data from the smartphone's sensors and the user's interaction and transmits it to the proxy.

6. The proxy collects the input data from the different clients and calculates meaningful output with the help of the selected proxy-algorithm.

7. All clients listen to the output stream (or, better, to an FM radio broadcast or the live session, because of the stream's latency).
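The workflow above can be modelled as a small in-memory Python sketch, mainly to make the division of labour between backend, proxy, index server and client concrete. Everything in it is an assumption for illustration: the names `BackendAnnouncement`, `Proxy`, `IndexServer`, the `"mean"`/`"max"` proxy-algorithms and the stream URL are hypothetical, and the real components are separate programs talking over the network rather than through method calls.

```python
# Minimal in-memory sketch of the a2r session workflow (steps 0-7).
# All names are hypothetical; the real components are separate programs
# that exchange these messages over the network.
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Dict, List


@dataclass
class BackendAnnouncement:
    """Step 1: what the audio backend tells the proxy after starting."""
    gui_elements: List[str]   # gui elements the backend can handle
    mapping: Dict[str, str]   # gui element -> backend input it controls
    algorithm: str            # preselected proxy-algorithm
    stream_url: str           # where the audio stream can be found


# Hypothetical proxy-algorithms: reduce one value per client to one value.
ALGORITHMS: Dict[str, Callable[[List[float]], float]] = {
    "mean": mean,
    "max": max,
}


@dataclass
class Proxy:
    announcement: BackendAnnouncement
    # Latest value per gui element, keyed by client id.
    inputs: Dict[str, Dict[str, float]] = field(default_factory=dict)

    def session_info(self) -> Dict[str, object]:
        """Step 4: tell a joining client which elements to show and where the stream is."""
        return {"gui_elements": self.announcement.gui_elements,
                "stream_url": self.announcement.stream_url}

    def receive(self, client_id: str, element: str, value: float) -> None:
        """Step 5: store the latest value sent by one client for one element."""
        self.inputs.setdefault(element, {})[client_id] = value

    def compute_output(self) -> Dict[str, float]:
        """Step 6: combine all clients' values with the selected algorithm
        and address each result to the backend input its element maps to."""
        combine = ALGORITHMS[self.announcement.algorithm]
        return {self.announcement.mapping[element]: combine(list(per_client.values()))
                for element, per_client in self.inputs.items()}


class IndexServer:
    """Central a2r index server: keeps track of joinable sessions (steps 2-3)."""

    def __init__(self) -> None:
        self.sessions: Dict[str, Proxy] = {}

    def register(self, name: str, proxy: Proxy) -> None:
        self.sessions[name] = proxy

    def list_sessions(self) -> List[str]:
        return sorted(self.sessions)


if __name__ == "__main__":
    proxy = Proxy(BackendAnnouncement(["slider"], {"slider": "cutoff"},
                                      "mean", "http://example.org/a2r.ogg"))
    index = IndexServer()
    index.register("barcelona-test", proxy)                  # step 2
    print(index.list_sessions())                             # step 3
    print(index.sessions["barcelona-test"].session_info())   # step 4
    proxy.receive("phone-a", "slider", 0.25)                 # step 5
    proxy.receive("phone-b", "slider", 0.75)
    print(proxy.compute_output())                            # step 6: {'cutoff': 0.5}
```

Storing only each client's latest value per element means every connected phone counts once in the combined result, however often it sends data; whether the real proxy-algorithms work this way is not settled by the notes above.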

– Communication protocol:

The communication protocol between the patches and the server is meant as a guideline for users who want to program a patch that is compatible with the a2r server.

The protocol defines what information (metadata) the patch has to send in order to run on the server, and which variables (sensors) the user will be able to modify.
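As an illustration only, a patch's registration message could bundle its metadata and its user-modifiable variables into one JSON document that the server checks on arrival. The JSON layout, the required fields (`name`, `author`, `stream_url`, `id`, `sensor`, `min`, `max`) and the helper names `build_registration` and `validate_registration` are all hypothetical; the actual a2r protocol may look quite different.

```python
# Sketch of a patch registration message: metadata plus user-modifiable
# variables. The JSON layout and all field names are hypothetical.
import json
from typing import Any, Dict, List

REQUIRED_METADATA = ("name", "author", "stream_url")
REQUIRED_VARIABLE_FIELDS = ("id", "sensor", "min", "max")


def build_registration(metadata: Dict[str, str],
                       variables: List[Dict[str, Any]]) -> str:
    """Serialise a patch's metadata and variables as one JSON message."""
    return json.dumps({"metadata": metadata, "variables": variables})


def validate_registration(raw: str) -> List[str]:
    """Return the problems found in a registration message (empty list if valid)."""
    msg = json.loads(raw)
    problems: List[str] = []
    for key in REQUIRED_METADATA:
        if key not in msg.get("metadata", {}):
            problems.append(f"missing metadata field: {key}")
    for i, var in enumerate(msg.get("variables", [])):
        for key in REQUIRED_VARIABLE_FIELDS:
            if key not in var:
                problems.append(f"variable {i}: missing field {key}")
        if "min" in var and "max" in var and var["min"] >= var["max"]:
            problems.append(f"variable {i}: min must be below max")
    return problems


if __name__ == "__main__":
    message = build_registration(
        {"name": "drone", "author": "a2r", "stream_url": "http://example.org/drone.ogg"},
        [{"id": "cutoff", "sensor": "accelerometer_x", "min": 0.0, "max": 1.0}],
    )
    print(message)
    print(validate_registration(message))  # []
```

Returning a list of problems rather than raising on the first one lets a patch author see every missing field at once.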

The protocol also allows variable groups to be implemented, so that more complex interfaces can be developed in the future.
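One possible shape for variable groups is a tree in which a group contains variables or further groups, and the server addresses each variable by its path. This is a hypothetical sketch: the `Variable`/`Group` classes, the slash-separated paths and the `flatten` helper are illustrative choices, not part of the agreed protocol.

```python
# Sketch of variable groups: a group bundles variables (or other groups)
# so a client can render them as one more complex control.
# All names and the path format are hypothetical.
from dataclasses import dataclass, field
from typing import Dict, List, Union


@dataclass
class Variable:
    id: str
    sensor: str  # sensor or gesture that drives this variable


@dataclass
class Group:
    id: str
    members: List[Union["Variable", "Group"]] = field(default_factory=list)


def flatten(node: Union[Variable, Group], prefix: str = "") -> Dict[str, str]:
    """Map the slash-separated path of every variable under node to its sensor."""
    path = prefix + node.id
    if isinstance(node, Variable):
        return {path: node.sensor}
    result: Dict[str, str] = {}
    for member in node.members:
        result.update(flatten(member, path + "/"))
    return result


if __name__ == "__main__":
    xy_pad = Group("xy_pad", [Variable("x", "accelerometer_x"),
                              Variable("y", "accelerometer_y")])
    interface = Group("ui", [xy_pad, Variable("volume", "touch")])
    print(flatten(interface))
    # {'ui/xy_pad/x': 'accelerometer_x', 'ui/xy_pad/y': 'accelerometer_y', 'ui/volume': 'touch'}
```

Flattening to paths keeps the wire format simple: the client can draw a two-axis pad for the `xy_pad` group while the backend still receives ordinary named variables.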